7 Steps to Avoid Getting Blocked When Web Scraping


One problem that frustrates even the best data extraction experts is getting blocked.

But don't worry: here are seven steps you can take if you get blocked while scraping data from a website:

Add a Real User Agent to Your Program

Your requests need to look like they come from a real Chrome browser operated by a real person. To do this, make sure you set a real user agent in your requests.

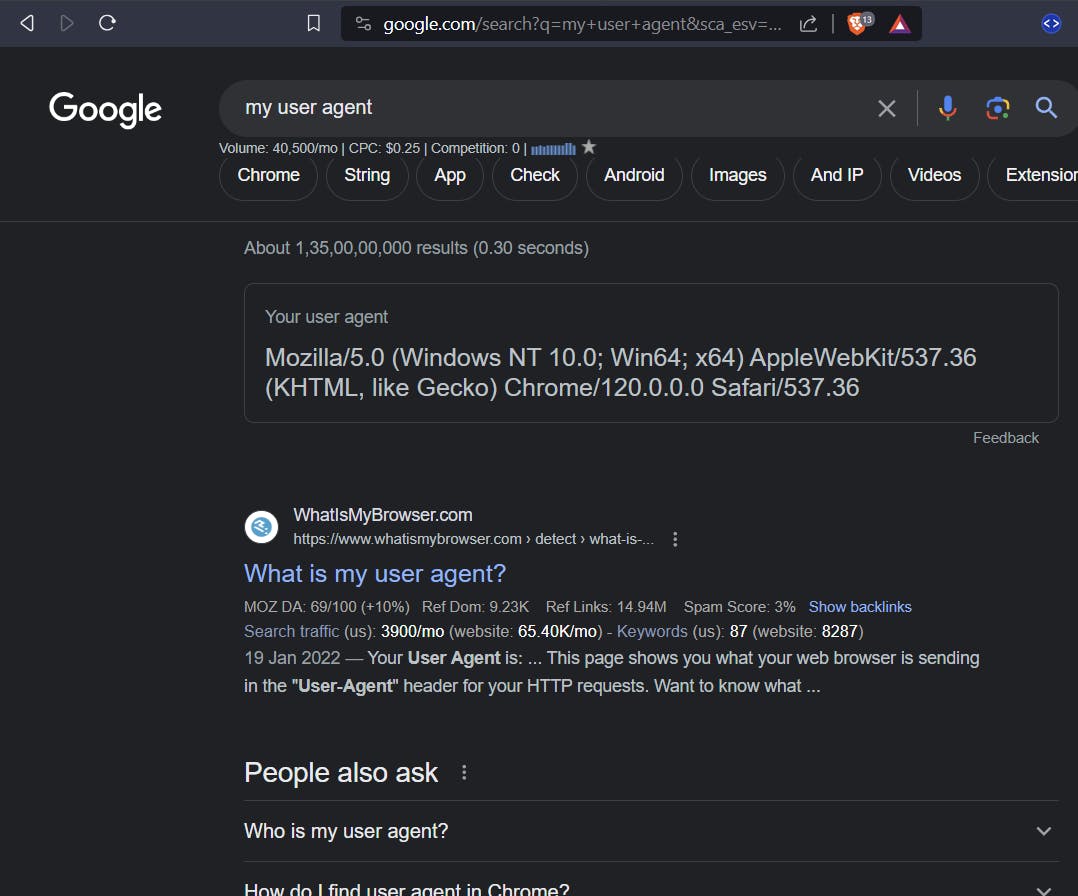

You can get a real user agent by searching Google for "my user agent" and using the result in your requests.

Selenium, Puppeteer, Splash, and Scrapy all let you customize the user agent sent with your requests.
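As a minimal sketch using only Python's standard library (`urllib.request`), setting the user agent comes down to adding a `User-Agent` header. The Chrome version string below is only an example (an assumption); paste the one your own browser reports. With the `requests` library you would pass the same dictionary as `headers=` to `requests.get`.

```python
import urllib.request

# Example Chrome user-agent string (an assumption): replace it with your own browser's.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url: str) -> urllib.request.Request:
    """Build a GET request that identifies itself with a real browser's user agent."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


if __name__ == "__main__":
    req = build_request("https://httpbin.org/user-agent")
    # urllib stores header names via str.capitalize(), so look it up as "User-agent".
    print(req.get_header("User-agent"))
    # Sending it is one more call: urllib.request.urlopen(req, timeout=15).read()
```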

Scrape Slowly

Some websites block you immediately if they see you making many requests in a short time. They usually respond with an HTTP 429 (Too Many Requests) status code.

Once you are blocked, you cannot visit the website for a period that usually ranges from a few minutes to an hour. Some websites even block your IP for an entire day if they see you fetching data too fast.
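A minimal sketch of scraping slowly in Python: wait a random few seconds before every request, and when the server answers 429, pause before retrying, honoring the `Retry-After` header when it gives a number of seconds. The `fetch` hook is a hypothetical interface, and the 2 to 5 second delay and 60-second starting back-off are assumptions to tune per site; `sleep` is injectable so the logic can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Optional, Tuple

# A fetch hook returns (status_code, Retry-After header or None, body).
# Hypothetical interface: wrap requests, urllib, or any HTTP client in it.
Fetch = Callable[[str], Tuple[int, Optional[str], str]]


def polite_get(
    url: str,
    fetch: Fetch,
    min_delay: float = 2.0,
    max_delay: float = 5.0,
    max_retries: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Pause a random interval before each request and back off on HTTP 429."""
    backoff = 60.0  # assumed starting wait (seconds) when no usable Retry-After is sent
    for attempt in range(max_retries + 1):
        sleep(random.uniform(min_delay, max_delay))  # jitter so requests aren't evenly spaced
        status, retry_after, body = fetch(url)
        if status != 429:
            return status, body
        if attempt == max_retries:
            break
        # Retry-After may be seconds or an HTTP date; only the seconds form is handled here.
        if retry_after is not None and retry_after.strip().isdigit():
            wait = float(retry_after)
        else:
            wait = backoff
            backoff *= 2  # double the wait after each 429 without a usable Retry-After
        sleep(wait)
    raise RuntimeError(f"still rate-limited after {max_retries} retries: {url}")
```

If you use Scrapy, its built-in `DOWNLOAD_DELAY` and `AUTOTHROTTLE_ENABLED` settings cover much of the same ground.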

Add Real Request Headers to Your Program (All of Them)

Many websites also inspect the headers that come with your request.

If they see anything unusual, they block the request.

To avoid this, send the same request headers a real browser would.

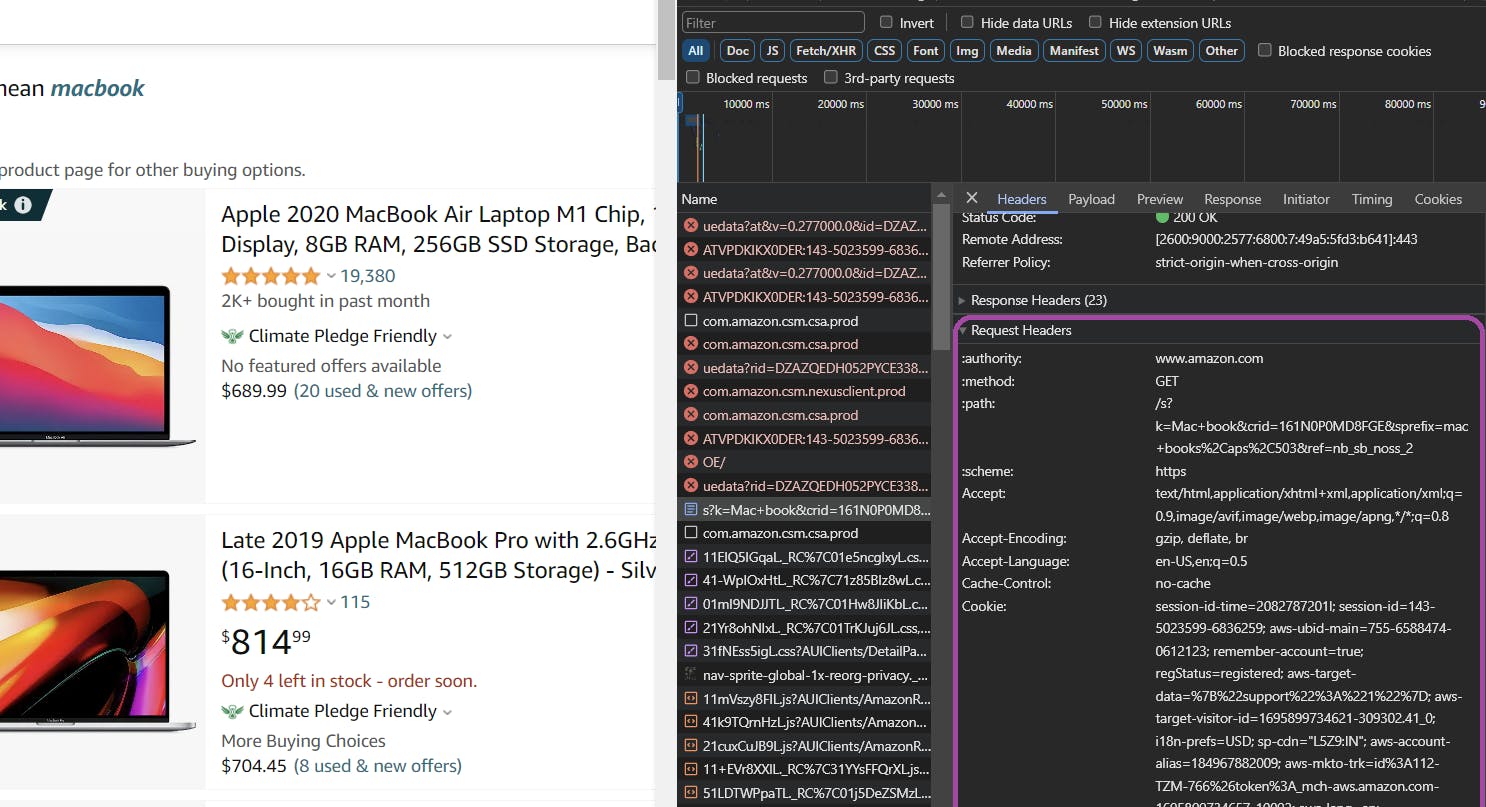

The best way to find the headers a website expects is to open your browser's developer tools (Inspect), go to the Network tab, and select the request that returns the data.

Its request headers are the ones you need to mimic.

You can use a tool like Postman to replay the request and check whether adding these headers solves the issue.

Alternatively, you can see the headers your real browser normally sends by visiting HTTPBin (for example, its /headers endpoint) and then add them to your program as request headers.
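Once you have copied the headers from the Network tab, a small helper can turn them into a dictionary for your requests. This is a sketch using Python's standard library; the header values below are example values (an assumption), and `Accept-Encoding` is deliberately left out because `urllib` does not decompress gzip or Brotli responses for you.

```python
import urllib.request

# Example headers copied from a Chrome session (assumed values; copy your own from DevTools).
RAW_HEADERS = """\
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
Accept-Language: en-US,en;q=0.9
Referer: https://www.google.com/
"""


def parse_raw_headers(raw: str) -> dict:
    """Turn 'Name: value' lines (as copied from the Network tab) into a dict."""
    headers = {}
    for line in raw.splitlines():
        if ":" not in line or line.startswith(":"):
            # Skip blank lines and HTTP/2 pseudo-headers such as ':authority'.
            continue
        name, value = line.split(":", 1)  # split on the first colon only
        headers[name.strip()] = value.strip()
    return headers


def build_browser_request(url: str, raw: str = RAW_HEADERS) -> urllib.request.Request:
    """Build a request carrying the full set of copied browser headers."""
    return urllib.request.Request(url, headers=parse_raw_headers(raw))
```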

Switch to a Different Technology

Some websites use stronger antibot mechanisms that can detect whether you are using Selenium or just the Requests library. To work around that, you can switch to another tool, for example from Selenium to Puppeteer, or use Splash with Scrapy to get the data.

This is also why it's generally recommended to first fetch the HTML of several different pages and check that the data comes back correctly before building the entire scraper. That way you can switch to another technology easily, without investing much time in one that only gets blocked later when you scrape large amounts of data.
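That quick check might look like this in Python: fetch a handful of representative pages and confirm that a piece of text you expect (the hypothetical `marker`, such as a CSS class around the data) actually appears in the HTML. If several pages fail, that is a sign to try a different tool before building the full scraper. The fetch function is swappable, so the same probe works whether the HTML comes from `urllib`, Requests, or a browser driver.

```python
import urllib.request
from typing import Callable, Dict, Iterable


def urllib_fetch(url: str) -> str:
    """Plain fetch with a browser-like user agent (example value)."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=15) as resp:
        return resp.read().decode("utf-8", errors="replace")


def probe_pages(
    urls: Iterable[str],
    marker: str,
    fetch: Callable[[str], str] = urllib_fetch,
) -> Dict[str, bool]:
    """Fetch each page once and report whether the expected data appears in its HTML."""
    results: Dict[str, bool] = {}
    for url in urls:
        try:
            html = fetch(url)
        except Exception:
            # HTTP errors (403, 429, ...) and network failures both count as a failed probe.
            results[url] = False
            continue
        results[url] = marker in html
    return results
```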

Use a Proxy Rotation Service

If you visit a website a large number of times, it may start blocking your IP address.

To check whether your IP is blocked, use a free VPN to change your IP address and see whether the page now loads in your Chrome browser.

If it does, your original IP was blocked, and you will need a proxy rotation service to scrape large amounts of data from that website.

A proxy rotation service automatically assigns a different IP address to each request.

GetOData is one such company: it provides access to premium residential proxies and has one of the highest success rates for scraping data.

It also includes other antibot bypass mechanisms, so you don't have to worry about your requests getting blocked by a website.
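If you manage your own list of proxies instead of using a service, rotation can be as simple as cycling through them and building a new `urllib` opener for each request. This is a sketch under that assumption: the proxy addresses are hypothetical placeholders, and a commercial rotation service handles this step for you.

```python
import itertools
import urllib.request
from typing import Iterable

# Placeholder proxy addresses (hypothetical); substitute the ones you actually have.
PROXIES = [
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
    "http://proxy3.example.com:8000",
]


class ProxyRotator:
    """Hand out proxies in round-robin order so consecutive requests use different IPs."""

    def __init__(self, proxies: Iterable[str]) -> None:
        pool = list(proxies)
        if not pool:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(pool)

    def next_proxy(self) -> str:
        return next(self._cycle)

    def opener(self) -> urllib.request.OpenerDirector:
        """Build an opener that sends both http and https traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)
```

For each request, call `rotator.opener().open(url, timeout=15)` so that every request goes out through the next proxy in the list.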

Handling Captchas

Captchas are considered one of the biggest hurdles when web scraping data.

Fortunately, there are practical ways to deal with them.

There are two types of captchas:

Soft captchas: These appear only when the website suspects that you are a bot or a program.

These can be avoided by going back to the second step above.

Hard captchas: These are shown to everyone, human or bot, every time the content is accessed.

The best way to solve hard captchas is to use a service that relies on real human workers or AI to solve them for you at a low cost.

Here are the two services I usually use to solve captchas:

2Captcha: https://2captcha.com/

CapSolver: https://www.capsolver.com/
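Services like these are generally used asynchronously: you submit the captcha, receive a task ID, and poll until the answer is ready. The Python sketch below shows only that polling loop. `submit` and `get_result` are hypothetical placeholders that you would wire to the provider's documented API, and the 5-second interval and 2-minute timeout are assumed defaults.

```python
import time
from typing import Callable, Optional


def solve_with_polling(
    submit: Callable[[], str],
    get_result: Callable[[str], Optional[str]],
    poll_interval: float = 5.0,
    timeout: float = 120.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Submit a captcha job, then poll until a solution token is ready or time runs out."""
    task_id = submit()  # hypothetical: returns the provider's task ID
    waited = 0.0
    while waited < timeout:
        sleep(poll_interval)
        waited += poll_interval
        token = get_result(task_id)  # hypothetical: None while the captcha is still pending
        if token is not None:
            return token
    raise TimeoutError(f"captcha task {task_id} not solved within {timeout} s")
```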

Last Resort for Antibot Systems

Sometimes, even after doing everything above, you may still get blocked by a website.

This can happen because websites can collect thousands of data points about you, such as:

Your device type

The location you are accessing the site from

The time you are accessing it

Your mouse movements and typing speed

And much more...

If even one of these looks suspicious, they can block your request.

In this case, the best way to still get the data you need is to use an API that handles the antibot mechanisms for you.

Here is the most powerful one on the market:

I hope you find this article useful in your scraping journey!

Feel free to ask me any questions here or on Twitter (https://twitter.com/SwapBuilds), and I will get back to you as soon as possible. Thanks for reading!