Fast Scraper

What is Fast Scraper?

Fast Scraper is a blazingly fast web scraper powered by Rust on the backend. It lets you scrape static HTML pages extremely quickly while using less than 128 MB of memory. With this scraper, you can get the most out of your Apify credits.

Why Choose Fast Scraper Over Cheerio?

Fast Scraper is blazing fast and will save you money. 🚀🚀🚀

Cheerio runs on Node.js, so all the heavy lifting is done in JavaScript. JavaScript was never designed for heavy-duty scraping in the first place. It's like building a rollercoaster game in an Excel sheet. 📉 📉 📉

How much will scraping with Fast Scraper cost you?

I ran a benchmark that scraped the full HTML of 1,000 csfd.cz pages (52 MB in total) with max_concurrency=50 and 128 MB of RAM. The run took 60 s and cost 0.026 USD, so it is very cheap. That works out to roughly 38,500 scraped pages (about 2 GB of HTML) for $1.
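The per-dollar figures follow directly from the benchmark numbers. The sketch below redoes that arithmetic. It assumes cost scales linearly with the number of pages, which is a simplification: real runs will vary with page size, site latency, and concurrency. The helper names are illustrative and are not part of the Actor.

```python
# Benchmark figures quoted above (1,000 csfd.cz pages, max_concurrency=50, 128 MB RAM).
BENCH_PAGES = 1_000
BENCH_COST_USD = 0.026
BENCH_SIZE_MB = 52


def pages_per_dollar(pages: int, cost_usd: float) -> float:
    """Pages one dollar buys, assuming cost grows linearly with page count."""
    return pages / cost_usd


def mb_per_dollar(size_mb: float, cost_usd: float) -> float:
    """Megabytes of HTML one dollar buys under the same linear assumption."""
    return size_mb / cost_usd


pages = pages_per_dollar(BENCH_PAGES, BENCH_COST_USD)
megabytes = mb_per_dollar(BENCH_SIZE_MB, BENCH_COST_USD)
print(f"{pages:,.0f} pages per $1")          # 38,462 pages per $1
print(f"{megabytes / 1000:.1f} GB per $1")   # 2.0 GB per $1
```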

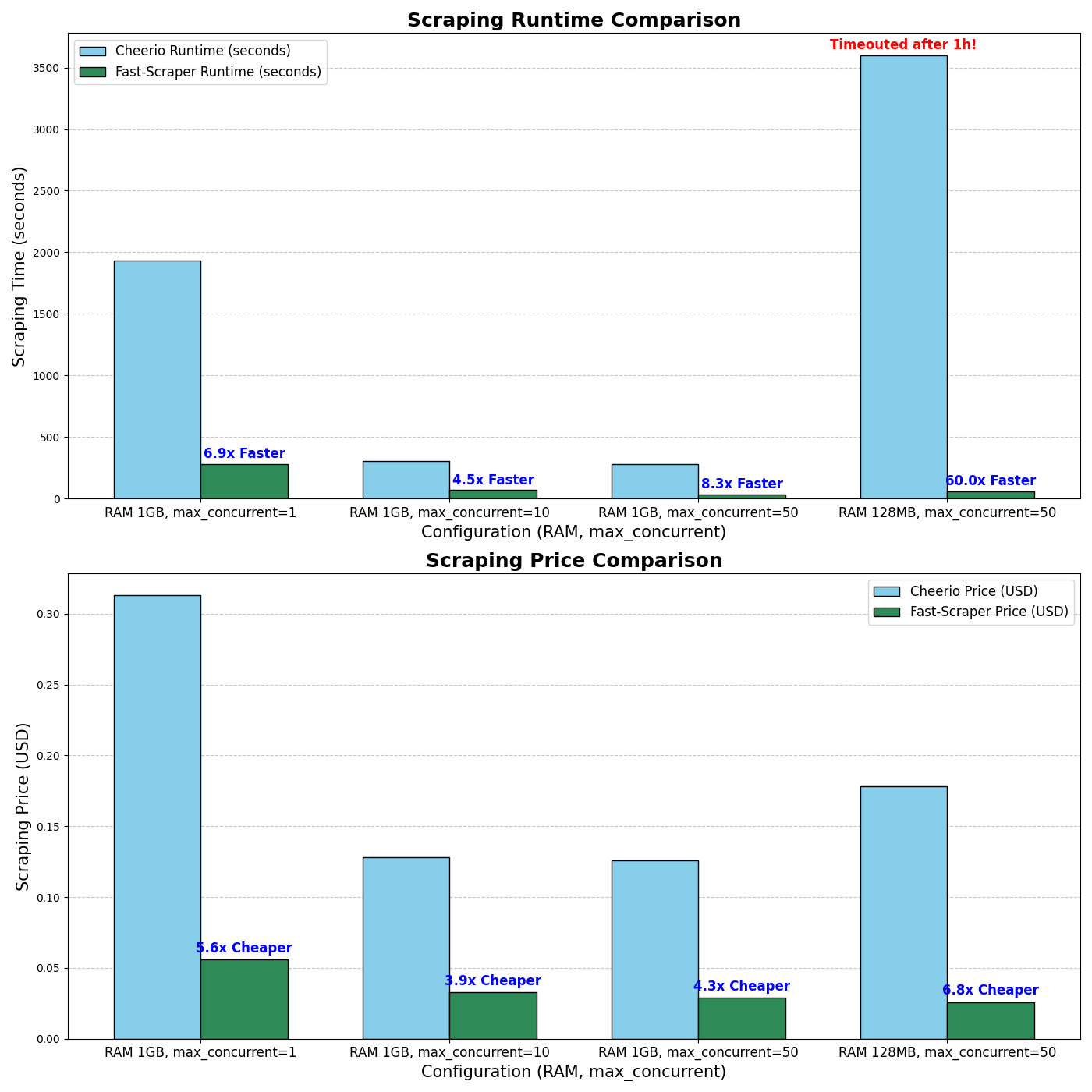

How much cheaper and faster?

I compared the two scrapers on 1,000 csfd.cz pages, scraping the entire static HTML of each page and saving it to storage. With Cheerio and 128 MB of RAM, the run timed out after 3,600 seconds because the actor needed more RAM. Fast Scraper, on the other hand, needed only an average of 33.2 MB of RAM and 0.88% CPU usage. It's extremely light and fast; at this point, the bottleneck is probably Docker itself.

Input parameters

Example of an input

```json
{
  "requests": [
    {
      "url": "https://www.scrapethissite.com/pages/simple/"
    },
    {
      "id": "forms",
      "url": "https://www.scrapethissite.com/pages/simple/",
      "extract": [
        {
          "field_name": "extracted_html",
          "selector": "#countries > div > div:nth-child(4) > div:nth-child(1)",
          "extract_type": "HTML"
        }
      ]
    },
    {
      "id": "hockey",
      "url": "https://www.scrapethissite.com/pages/forms/",
      "extract": [
        {
          "field_name": "year1",
          "selector": "#hockey > div > table > tbody > tr:nth-child(2) > td.year",
          "extract_type": "Text"
        },
        {
          "field_name": "year2",
          "selector": "#hockey > div > table > tbody > tr:nth-child(3) > td.year",
          "extract_type": "Text"
        },
        {
          "field_name": "class_name",
          "selector": "#hockey > div > table > tbody > tr:nth-child(2) > td.year",
          "extract_type": {
            "Attribute": "class"
          }
        }
      ]
    }
  ],
  "user_agent": "ApifyFastScraper/1.0",
  "force_cloud": false,
  "push_data_size": 500,
  "max_concurrency": 10,
  "max_request_retries": 3,
  "max_request_retry_timeout_ms": 10000,
  "request_retry_wait_ms": 5000
}
```
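Writing the requests list by hand gets tedious for large runs such as the 1,000-page benchmark above. Below is a minimal sketch of generating the input in Python. build_input and the h1 rule are hypothetical, and the option values are simply copied from the example input. You would paste the printed JSON into the Actor's input.

```python
import json


def build_input(urls, extract=None, max_concurrency=10):
    """Build a Fast Scraper input dict with one request per URL (hypothetical helper)."""
    requests = []
    for url in urls:
        request = {"url": url}
        if extract:
            # Give each request its own copy of the shared extract rules.
            request["extract"] = [dict(rule) for rule in extract]
        requests.append(request)
    # Global options, copied from the example input.
    return {
        "requests": requests,
        "user_agent": "ApifyFastScraper/1.0",
        "force_cloud": False,
        "push_data_size": 500,
        "max_concurrency": max_concurrency,
        "max_request_retries": 3,
        "max_request_retry_timeout_ms": 10000,
        "request_retry_wait_ms": 5000,
    }


# Assumed extract rule: the text matched by an "h1" selector on each page.
title_rule = {"field_name": "title", "selector": "h1", "extract_type": "Text"}

payload = build_input(
    [
        "https://www.scrapethissite.com/pages/simple/",
        "https://www.scrapethissite.com/pages/forms/",
    ],
    extract=[title_rule],
)
print(json.dumps(payload, indent=2))
```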

Breaking Down the Configuration

- requests: The list of web pages to scrape. Each entry (request) accepts the following fields:

  - url: The URL of the web page to scrape.

  - id (optional): A unique identifier for the request. If you omit it, the output contains a generated UUID instead, as in the second item of the example output.

  - extract (optional): A list of fields to extract from the page. If you omit it, the page's full HTML is returned under full_html. Each field has:

    - field_name: The key under which the extracted value appears in the output.

    - selector: A CSS selector that pinpoints the HTML element containing the data.

    - extract_type: The type of extraction: "Text", "HTML", or { "Attribute": "class" } to read an attribute such as class.

- Headers (optional): Additional HTTP headers, set globally or for individual requests. When both are set, the request headers replace the global headers.

  - Example global header: { "Accept": "application/json" }

  - Example request-specific header: { "Accept-Language": "en-US" }

- user_agent (optional): The User-Agent string sent with your requests, set globally or for individual requests. A request's user agent replaces the global one. Setting it helps mimic different web browsers.

- Advanced options (optional):

  - force_cloud: Whether to force the scraper to run in a cloud environment.

  - push_data_size: The maximum size of each chunk of data pushed to storage. A smaller value offloads the data into storage in smaller chunks.

  - max_concurrency: The maximum number of concurrent requests.

  - max_request_retries: The number of times a failed request is retried.

  - max_request_retry_timeout_ms: The maximum time, in milliseconds, to wait before retrying a request.

  - request_retry_wait_ms: The waiting time, in milliseconds, between retries.
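The field rules above are easy to check locally before starting a run. Here is a minimal validation sketch. validate_input is a hypothetical helper that checks only what this section describes, so the Actor itself may accept or reject inputs it does not cover. The "Markdown" value in the usage example is deliberately unsupported.

```python
def validate_input(payload: dict) -> list:
    """Return the problems found in a Fast Scraper input; an empty list means none."""
    requests = payload.get("requests")
    if not isinstance(requests, list) or not requests:
        return ["'requests' must be a non-empty list"]
    errors = []
    for i, request in enumerate(requests):
        if not isinstance(request, dict):
            errors.append(f"requests[{i}]: must be an object")
            continue
        if not isinstance(request.get("url"), str):
            errors.append(f"requests[{i}]: 'url' is required")
        for j, rule in enumerate(request.get("extract", [])):
            where = f"requests[{i}].extract[{j}]"
            for key in ("field_name", "selector", "extract_type"):
                if key not in rule:
                    errors.append(f"{where}: missing '{key}'")
            kind = rule.get("extract_type")
            # An attribute extraction looks like {"Attribute": "class"}.
            is_attribute = (
                isinstance(kind, dict)
                and list(kind) == ["Attribute"]
                and isinstance(kind["Attribute"], str)
            )
            if kind is not None and kind not in ("Text", "HTML") and not is_attribute:
                errors.append(f"{where}: unsupported extract_type {kind!r}")
    return errors


example = {
    "requests": [
        {"url": "https://www.scrapethissite.com/pages/simple/"},
        {
            "url": "https://www.scrapethissite.com/pages/forms/",
            "extract": [
                {"field_name": "class_name", "selector": "td.year",
                 "extract_type": {"Attribute": "class"}},
            ],
        },
        # Broken on purpose: no url, and an unsupported extract_type.
        {"extract": [{"field_name": "x", "selector": "p", "extract_type": "Markdown"}]},
    ]
}
for problem in validate_input(example):
    print(problem)
# requests[2]: 'url' is required
# requests[2].extract[0]: unsupported extract_type 'Markdown'
```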

Example of an output

```json
[
  {
    "id": "hockey",
    "url": "https://www.scrapethissite.com/pages/forms/",
    "data": {
      "year2": "\n1990\n",
      "class_name": "year",
      "year1": "\n1990\n"
    }
  },
  {
    "id": "9a2c62e1-79b0-4081-8db8-7d8cf549d4af",
    "url": "https://www.scrapethissite.com/pages/simple/",
    "data": {
      "full_html": "<!doctype html>\n<html lang=\"en\">the rest of html</html>"
    }
  },
  {
    "id": "forms",
    "url": "https://www.scrapethissite.com/pages/simple/",
    "data": {
      "extracted_html": "\n<h3 class=\"country-name\">\n<i class=\"flag-icon flag-icon-ad\"></i>\nAndorra\n</h3>\n<div class=\"country-info\">\n<strong>Capital:</strong> <span class=\"country-capital\">Andorra la Vella</span><br>\n<strong>Population:</strong> <span class=\"country-population\">84000</span><br>\n<strong>Area (km<sup>2</sup>):</strong> <span class=\"country-area\">468.0</span><br>\n</div>\n"
    }
  }
]
```

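Text extraction keeps the whitespace surrounding an element's content, as the year1 and year2 values in the example output show. If you need clean values, a small post-processing step on the exported dataset items is enough. This is a minimal sketch: clean_items is a hypothetical helper, and it assumes you have already loaded the items (for example, from a JSON export) into a list of dicts.

```python
def clean_items(items):
    """Return copies of the dataset items with whitespace stripped from string data values."""
    cleaned = []
    for item in items:
        data = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in item.get("data", {}).items()
        }
        # Copy the item so the original list is left untouched.
        cleaned.append({**item, "data": data})
    return cleaned


# First item of the example output.
items = [
    {
        "id": "hockey",
        "url": "https://www.scrapethissite.com/pages/forms/",
        "data": {"year2": "\n1990\n", "class_name": "year", "year1": "\n1990\n"},
    }
]
cleaned = clean_items(items)
print(cleaned[0]["data"])  # {'year2': '1990', 'class_name': 'year', 'year1': '1990'}
```

The helper copies each item instead of editing it in place, so you can still compare the raw and cleaned values.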
Your feedback

I am always working on improving the performance of my Actors. So if you’ve got any technical feedback for Fast Scraper or simply found a bug, please create an issue on the Actor’s Issues tab in Apify Console.

Frequently Asked Questions

Is it legal to scrape public data?

Yes, if you're scraping publicly available data for personal or internal use. Always review the website's Terms of Service before large-scale use or redistribution.

Do I need to code to use this scraper?

No. This is a no-code tool: list the pages you want in the JSON input (optionally with CSS selectors for the fields to extract) and run the scraper directly from your dashboard or the Apify Actor page.

What data does it extract?

By default, it returns the full HTML of each page. If you add extract rules, it returns the text, HTML, or attribute values of the elements matched by your CSS selectors. You can export all of it to Excel or JSON.

Can I scrape multiple pages?

Yes. Add one entry per page to the requests list, each with its own optional extract rules.

How do I get started?

You can use the Try Now button on this page to go to the scraper. Enter the pages you want to scrape in the input, run it, and get structured results. No setup needed!