GitHub Issue Notifier

GitHub Issue Notifier watches the selected GitHub repositories for new issues containing at least one of the selected keywords. The discovered issues are stored in the default dataset and, if configured, sent as Slack messages. The first run finds all existing issues; subsequent runs find only issues created since the last search. Issue edits are not taken into account.
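The run-to-run behaviour described above can be pictured as a filter over issue objects. The following is a minimal sketch, not the Actor's actual implementation: the function names and the `last_run_at` parameter are hypothetical, and it assumes issue objects that carry the `title`, `body`, and `created_at` fields found in GitHub's REST API responses.

```python
from datetime import datetime, timezone
from typing import Optional


def parse_github_time(value: str) -> datetime:
    """Parse a GitHub-style UTC timestamp such as '2024-01-05T10:00:00Z'."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def select_new_matching_issues(issues, keywords, last_run_at: Optional[datetime] = None):
    """Return issues that mention a keyword and are newer than the last run.

    `last_run_at` is a hypothetical stand-in for whatever state the Actor
    keeps between runs; None models the first run, where nothing is skipped.
    """
    lowered = [keyword.lower() for keyword in keywords]
    selected = []
    for issue in issues:
        created = parse_github_time(issue["created_at"])
        if last_run_at is not None and created <= last_run_at:
            continue  # already covered by an earlier run
        # GitHub may return a null body, so fall back to an empty string.
        text = f"{issue.get('title') or ''}\n{issue.get('body') or ''}".lower()
        if any(keyword in text for keyword in lowered):
            selected.append(issue)
    return selected
```

Because the comparison uses only the creation time, an issue that is edited later to include a keyword would not be picked up, which matches the note that edits are not accounted for.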

Input

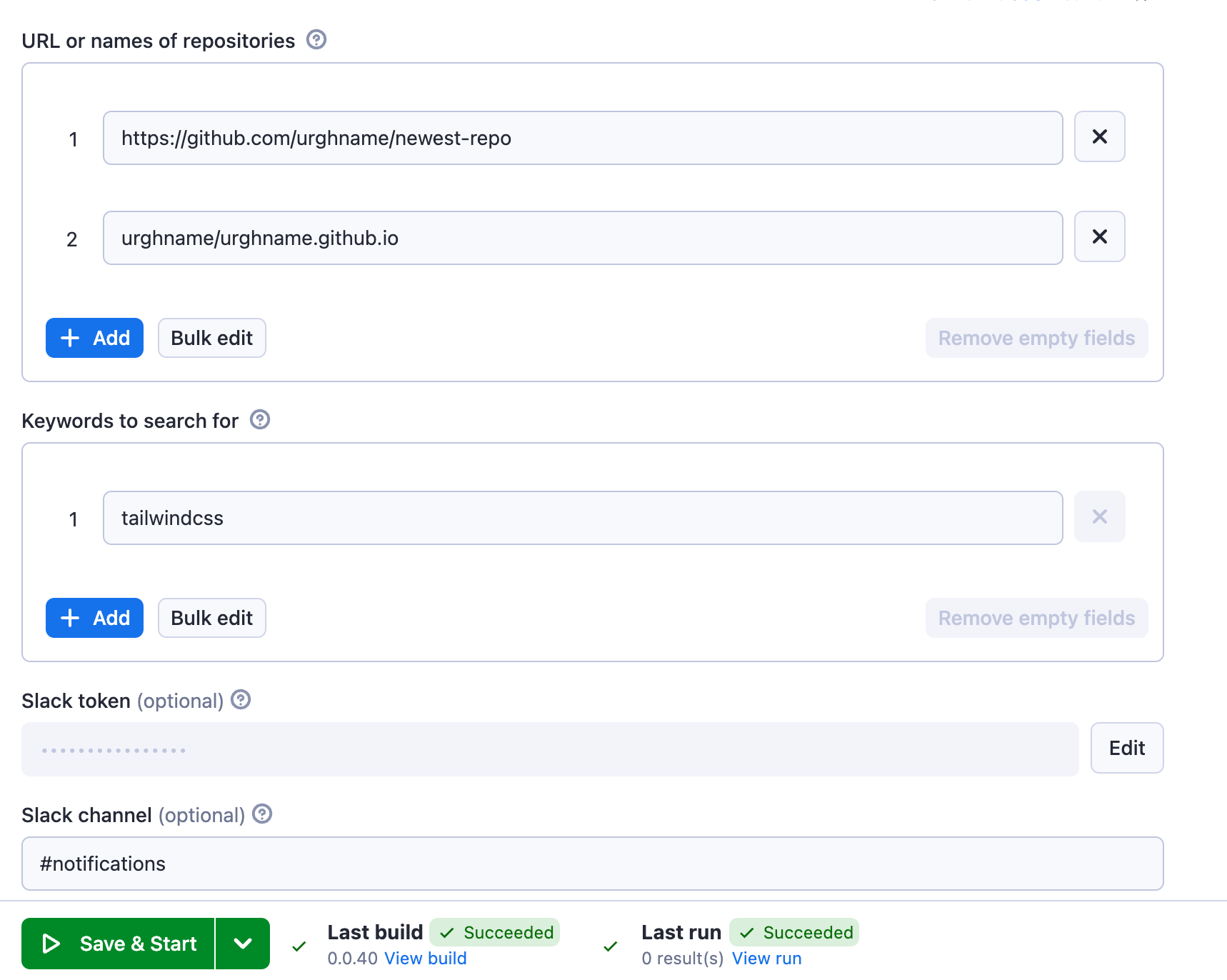

To run this Actor, you'll need at least one GitHub repository and a Slack API token. You can get the token by following the instructions on the Slack tutorials page. Here's the full input that you'll need:

- URLs or repository names of repositories you want to monitor.

- Keywords that will be looked for in the titles and bodies of new issues. The search is not case-sensitive.

- Slack channel where the notification with GitHub issue information will be sent (e.g. #notifications).

- Slack API token in the format xoxp-xxxxxxxxx-xxxx.
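Since repositories can be given either as URLs or as repository names, some normalization step is presumably needed before querying GitHub. The sketch below shows one way this could work; the function `normalize_repository` is hypothetical, the accepted forms are an assumption, and `octocat/Hello-World` is used purely as a sample repository.

```python
import re

# Conservative pattern for an 'owner/repo' pair (an assumption, not GitHub's exact rules).
_REPO_PATTERN = re.compile(r"^[A-Za-z0-9_.-]+/[A-Za-z0-9_.-]+$")


def normalize_repository(value: str) -> str:
    """Turn a GitHub URL or an 'owner/repo' string into 'owner/repo'."""
    cleaned = value.strip()
    # Drop an optional scheme and the github.com host if a URL was given.
    cleaned = re.sub(r"^(https?://)?(www\.)?github\.com/", "", cleaned, flags=re.IGNORECASE)
    # Keep only the first two path segments, ignoring e.g. a trailing '/issues'.
    candidate = "/".join(cleaned.rstrip("/").split("/")[:2])
    if candidate.endswith(".git"):
        candidate = candidate[: -len(".git")]
    if not _REPO_PATTERN.match(candidate):
        raise ValueError(f"Not a recognizable GitHub repository: {value!r}")
    return candidate
```

For example, both `https://github.com/octocat/Hello-World` and `octocat/Hello-World` would resolve to the same `octocat/Hello-World` identifier, while a bare word without an owner raises a `ValueError`.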

Output

Each run of this Actor outputs an array of new issues. The issue objects have the shape returned by GitHub's API through Octokit; see the GitHub documentation for details.
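To illustrate how such an issue object could be turned into a Slack notification, here is a small sketch. It is an assumption, not the Actor's documented message format: `build_slack_payload` is a hypothetical helper, it relies on the `number`, `title`, and `html_url` fields of GitHub issue objects, and it produces a body of the kind accepted by Slack's `chat.postMessage` method without actually sending it.

```python
def escape_slack_text(text: str) -> str:
    """Escape the three characters Slack treats as control characters."""
    return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")


def build_slack_payload(issue: dict, channel: str) -> dict:
    """Build a message body for one issue (hypothetical message layout)."""
    label = escape_slack_text(f"#{issue['number']} {issue['title']}")
    # Slack renders <url|label> as a clickable link.
    text = f"New issue: <{issue['html_url']}|{label}>"
    return {"channel": channel, "text": text}
```

The resulting dictionary could then be posted as JSON with the Slack API token in a bearer `Authorization` header; that sending step is left out here so the sketch stays free of network calls.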

How to run GitHub Issue Notifier

- Get a free Apify account.

- Create a new task.

- Modify the Actor input configuration as needed: add GitHub repository names, keywords, Slack API token and channel.

- Click the Start button.

- Get notified in the selected Slack channel whenever a new matching issue appears in a monitored GitHub repository.

Want to automate Slack notifications or track GitHub issues?

You can use the simple automation tools below. Some are built for Slack notification use cases that you can set up for GitHub, Toggl, or other platforms; others are built specifically for GitHub. Feel free to browse them:

| 💌 Slack Message Generator | 👀 Slack Messages Downloader |

| ⚠️ Github Issues Tracker | 🏅 Github Champion Scraper |

Frequently Asked Questions

Is it legal to scrape public data?

Yes, if you're scraping publicly available data for personal or internal use. Always review the website's Terms of Service before large-scale use or redistribution.

Do I need to code to use this scraper?

No. This is a no-code tool: just enter the repositories, keywords, and Slack details, then run the Actor directly from your dashboard or the Apify Actor page.

What data does it extract?

It extracts the new issues that contain at least one of your keywords, as issue objects returned by GitHub's API. You can export all of it to Excel or JSON.

Can I monitor multiple repositories or filter by keyword?

Yes, you can monitor multiple repositories and narrow the results by keyword, depending on the input settings you use.

How do I get started?

You can use the Try Now button on this page to go to the Actor. You'll be guided to enter your repositories and keywords and get structured results.